| Pricing | 69€ / month | 63€ / month |
|---|---|---|
| Features | a | a |
| AI moderation accuracy | High – based on word meanings | Low – based on keywords |
| Human oversight for AI moderation | | |
| Human moderation | | |
| Custom moderation settings (not keywords) | | |
| Moderating images, gifs, links, stickers, emojis | | |
| Moderating ads | | |
| Comments liking | | |
| Hate Comment Offender Watchlist | | |
| Integrated Social Media Platforms | a | a |
| Youtube | | |
| Facebook | | |
| Instagram | | |
| Disqus | | |
| TikTok | | |
| API | | |
| Languages | a | a |
| English | | |
| Spanish | | |
| German | | |
| Polish | | |
| Czech | | |
| Slovak | | |
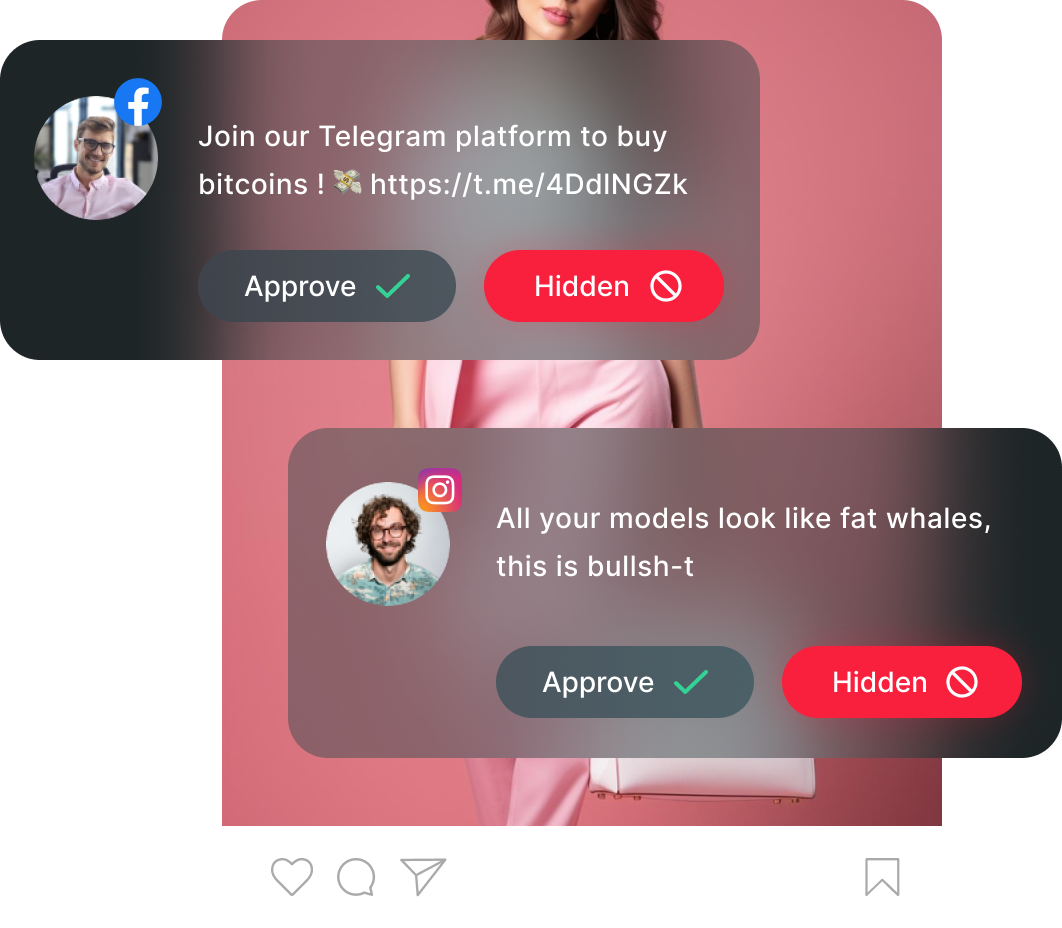

The rules are based on Meta's existing community guidelines and on the current DSA (Digital Services Act) legislation. All our trained moderators, as well as the AI model, follow the moderation manual. We hide comments that show any of the following characteristics:

1. Vulgarisms

Comments containing rude or pejorative words, including “censored” forms of these expressions (K.K.T, p*ča, etc.).

2. Insults

Comments that target specific people or specific groups of people. These include, for example, dehumanizing expressions that compare people to animals, diseases, objects, dirt, and the like. We also include racism and comments attacking gender or orientation.

3. Violence

Comments that approve of violent acts, encourage others to carry them out, or threaten the person concerned in some way.

4. Hoaxes, misinformation, harmful stereotypes

Comments that try to obscure, deny, or spread false news about events for which verified information already exists. This also includes classic conspiracy theories, stereotypes, and myths that attack specific groups of people (for example, Jews or Roma).

5. Scams, fraud

This group includes comments published by bots or fraudsters to deceive ordinary users or profit from them.

If we are not sure of our decision, or if we think the comment is on the edge, we always prefer to approve it.
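The decision rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `Category`, `Assessment`, and `decide` are hypothetical and do not come from the product or its API, and reducing a reviewer's certainty to a single `confident` flag is a simplification.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Category(Enum):
    """The five rule groups listed above (names are illustrative)."""
    VULGARISM = "vulgarisms"
    INSULT = "insults"
    VIOLENCE = "violence"
    MISINFORMATION = "hoaxes, misinformation, harmful stereotypes"
    SCAM = "scams, fraud"


@dataclass
class Assessment:
    category: Optional[Category]  # None means no rule matched
    confident: bool               # False means the comment is borderline


def decide(assessment: Assessment) -> str:
    """Hide only on a confident rule match; otherwise approve."""
    if assessment.category is not None and assessment.confident:
        return "hide"
    # Borderline or unmatched comments are approved by default.
    return "approve"
```

For example, `decide(Assessment(Category.INSULT, confident=False))` returns `"approve"`, which mirrors the preference for approving comments that sit on the edge.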

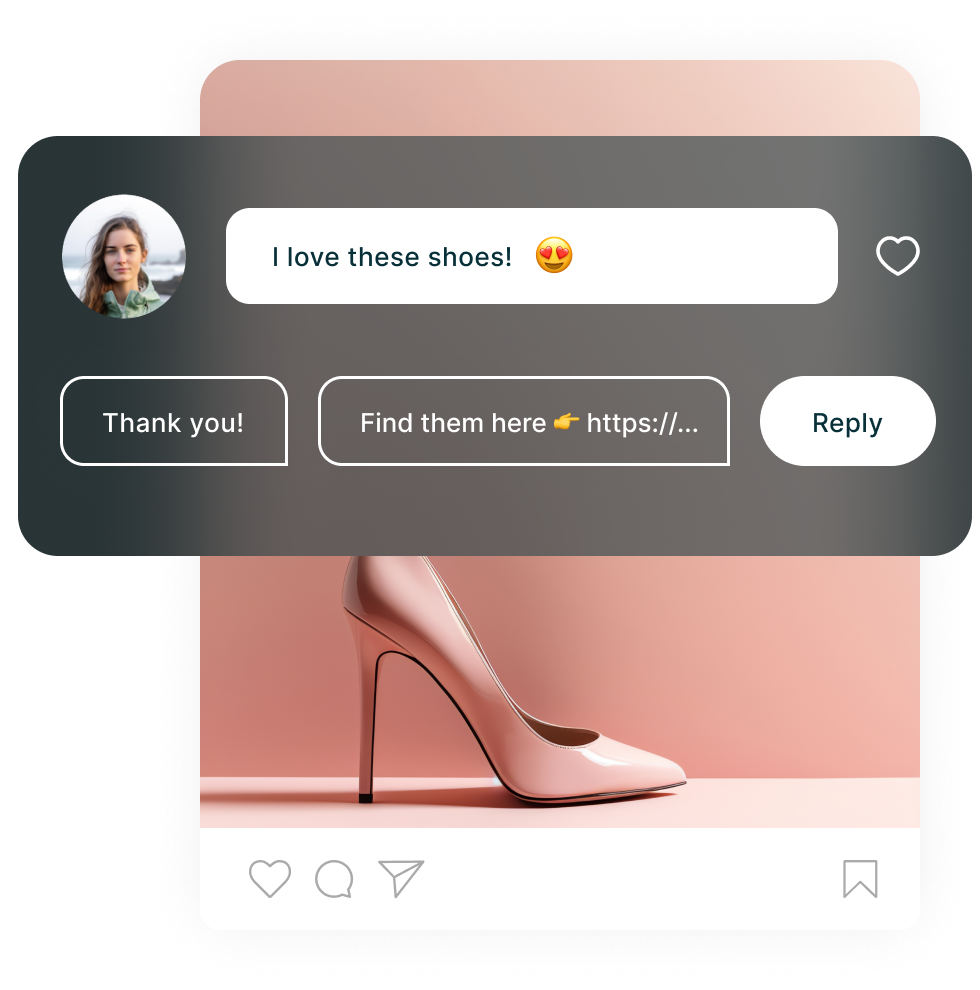

Moderation for our clients increased the number of polite comments by encouraging more users to join the discussion. After 5 months of moderation, the rate of harmful comments fell from 21% to 10%, and the total number of comments increased from 36k to 41k.
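To put these figures in relative terms, here is a small worked calculation. It assumes that the 21% and 10% rates apply to the 36k and 41k totals respectively, which the figures above do not state explicitly; the helper `relative_change` is introduced only for this illustration.

```python
def relative_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, as a fraction of `before`."""
    return (after - before) / before


# Figures quoted in the text.
rate_before, rate_after = 0.21, 0.10
total_before, total_after = 36_000, 41_000

# Relative drop in the harmful-comment rate and relative growth in volume.
rate_change = relative_change(rate_before, rate_after)
total_change = relative_change(total_before, total_after)

# Assumption: each rate applies to the total from the same period.
harmful_before = round(rate_before * total_before)
harmful_after = round(rate_after * total_after)

print(f"Harmful rate change: {rate_change:.0%}")
print(f"Total comments change: {total_change:.0%}")
print(f"Estimated harmful comments: {harmful_before} -> {harmful_after}")
```

Under that assumption, the harmful-comment rate roughly halves while overall comment volume grows by about 14%.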

We collaborated with CulturePulse on the emotion analysis feature. CulturePulse technology focuses on more than 90 dimensions of human psychology and culture, including morality, conflict and social issues.

Their AI model builds not only on traditional machine learning processes, but also on shared evolutionary patterns that occur across different cultures. The algorithm uses over 30 years of clinically proven cognitive science to decode the beliefs that influence people’s behavior.

We fight against hoaxes and misinformation to protect brands on their social networks.