Content moderation is crucial for managing online communities. This blog post explores the irreplaceable role of human content moderators: the skills they bring that AI lacks, the challenges moderation faces, and which approach works best. 👩‍💻


Human judgment in content moderation is essential and cannot be replaced by automation. Algorithms process data quickly but lack the nuanced understanding and empathy of humans. Human moderators can interpret context, cultural nuances, and subtle cues, distinguishing sarcasm from hate speech and humor from harassment. For example, if an influencer asks followers about their favourite animal, a comment like “Rats!” is harmless. Under a post about a celebrity, politician, or journalist, however, the same comment becomes offensive and toxic. AI rarely picks up on that difference; human moderators do. This contextual understanding keeps moderation decisions in line with a community’s standards. Human judgment also brings flexibility, letting moderators handle new social situations that predefined rules do not cover.

Here is an example from practice: after the attempted assassination of Slovak Prime Minister Robert Fico on May 15, 2024, AI models did not hide comments celebrating the tragedy (violent comments), but our moderators did.

We found that under posts on pages using an AI-only moderation tool, the hide rate was 25%, whereas elv.ai’s combination of human moderators and AI identified a toxicity rate of 51%.

is worth it.

According to elv.ai’s studies, a human content moderator reviews an average of 300 comments per hour. With AI assistance, that number rises to over 1,500 comments per hour, at least five times as many. By combining the two, we have also achieved three great things:

“The combination of artificial intelligence and human moderators is the most effective way to moderate content, according to research.”

Mandy Lau, York University, 2022
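To put the throughput figures quoted above into perspective, the short Python sketch below turns them into review time and staffing. The two rates (300 and 1,500 comments per hour) come from the text; because 1,500 is stated as a lower bound, the results are conservative. The backlog and inflow sizes, and the function names, are hypothetical values chosen only for illustration.

```python
import math

# Rates taken from the text (comments reviewed per hour by one moderator).
HUMAN_ONLY_RATE = 300
HUMAN_PLUS_AI_RATE = 1_500  # quoted as "over 1,500", so this is a lower bound


def hours_to_clear(backlog: int, rate_per_hour: int) -> float:
    """Hours a single moderator needs to review `backlog` comments."""
    return backlog / rate_per_hour


def moderators_needed(inflow_per_hour: int, rate_per_hour: int) -> int:
    """Moderators needed to keep pace with a steady hourly inflow of comments."""
    return math.ceil(inflow_per_hour / rate_per_hour)


if __name__ == "__main__":
    backlog = 10_000  # hypothetical backlog size
    inflow = 6_000    # hypothetical number of comments arriving per hour
    print(f"Speed-up: {HUMAN_PLUS_AI_RATE / HUMAN_ONLY_RATE:.0f}x")
    print(f"Backlog, human only: {hours_to_clear(backlog, HUMAN_ONLY_RATE):.1f} h")
    print(f"Backlog, human + AI: {hours_to_clear(backlog, HUMAN_PLUS_AI_RATE):.1f} h")
    print(f"Moderators, human only: {moderators_needed(inflow, HUMAN_ONLY_RATE)}")
    print(f"Moderators, human + AI: {moderators_needed(inflow, HUMAN_PLUS_AI_RATE)}")
```

Run as a script, it reports a 5x speed-up, about 33.3 hours versus 6.7 hours for the hypothetical backlog, and 20 versus 4 moderators for the hypothetical inflow.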

Automated content moderation tools are invaluable for initial content screening, identifying and flagging potentially harmful content quickly. These tools can handle large volumes of data, enabling human moderators to focus on more intricate cases that require detailed analysis and contextual understanding.

Human moderators excel at analyzing complex cases that require nuanced judgment. Their ability to understand context, cultural nuances, and emotional cues, and to detect new types of spam and hate speech, makes them indispensable for final content decisions. This focus on complex cases ensures that content moderation maintains a high standard of accuracy and fairness.
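The division of labor described in the two paragraphs above can be sketched as a simple confidence-based triage. This is a minimal Python illustration, not elv.ai’s pipeline: it assumes a hypothetical classifier that gives each comment a toxicity score between 0 and 1, and the two thresholds are arbitrary placeholders. Only comments the model is very sure about are handled automatically; everything in between goes to a human.

```python
from dataclasses import dataclass, field


@dataclass
class Comment:
    text: str
    toxicity: float  # score in [0, 1] from a hypothetical automated classifier


@dataclass
class TriageResult:
    auto_hidden: list[Comment] = field(default_factory=list)
    auto_approved: list[Comment] = field(default_factory=list)
    human_review: list[Comment] = field(default_factory=list)


def triage(comments: list[Comment],
           hide_at: float = 0.95,
           approve_at: float = 0.05) -> TriageResult:
    """Act automatically only on confident scores; route the rest to humans.

    The threshold values are placeholders, not recommendations.
    """
    result = TriageResult()
    for comment in comments:
        if comment.toxicity >= hide_at:
            result.auto_hidden.append(comment)    # clearly harmful
        elif comment.toxicity <= approve_at:
            result.auto_approved.append(comment)  # clearly harmless
        else:
            result.human_review.append(comment)   # needs context and judgment
    return result


if __name__ == "__main__":
    batch = [
        Comment("Love this photo!", 0.01),
        Comment("Rats!", 0.40),  # harmless or insulting depending on the post
        Comment("[explicit threat]", 0.99),
    ]
    result = triage(batch)
    print([c.text for c in result.human_review])  # ['Rats!']
```

Widening the band between `hide_at` and `approve_at` sends more comments to moderators; narrowing it automates more of the workload. Auto-hiding only the highest-scoring comments also keeps the most offensive material out of the human review queue.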

Implementing clear guidelines and regular training for both automated systems and human moderators is crucial. Consistent training ensures that moderators are well-equipped to handle diverse and evolving content, while guidelines help maintain uniformity in decision-making.

Human content moderators are essential but can be expensive, given the payroll costs of large teams. AI content moderation, on the other hand, can be more cost-effective, depending on the organization’s requirements. The cost of AI software varies with factors such as company preferences and the specific AI tools needed for particular tasks.

AI content moderation can process content faster than any human, making it ideal for handling large volumes. Human moderators, however, are more accurate when a decision depends on subjective interpretation. Their ability to interpret subtle differences in content, such as sarcasm or cultural references, leads to more accurate and contextually appropriate moderation.

The daily growth in user-generated content (UGC), along with the inappropriate material it contains, can strain human moderators and reduce their effectiveness. Automated content moderation can handle a significant portion of the process, shielding human moderators from the most offensive material and letting them focus on more nuanced cases.

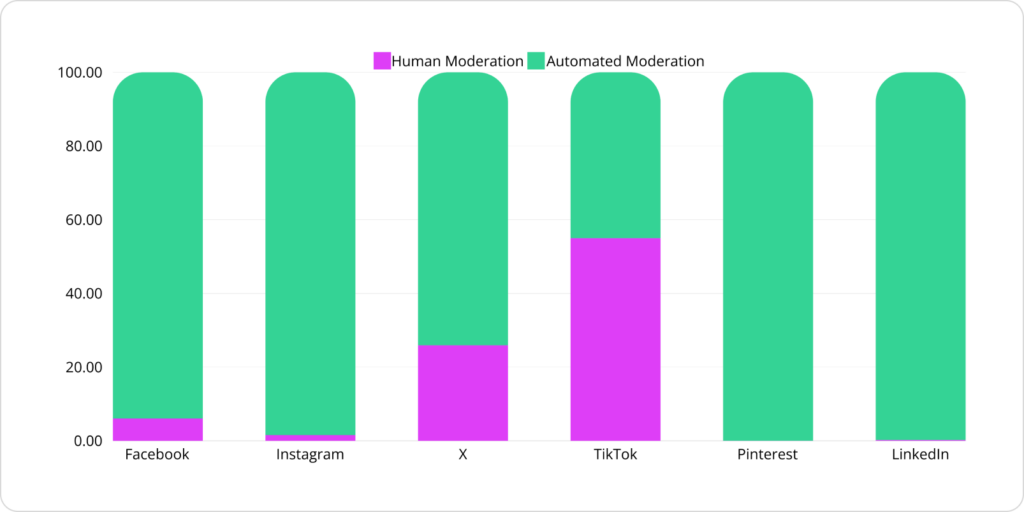

Data from DSA transparency reports reveals the extent of automated versus human moderation across major platforms. For instance, platforms like Facebook and Instagram rely heavily on automated decisions, with human moderation making up only a small percentage. However, platforms like TikTok and X (formerly Twitter) have higher rates of human moderation, highlighting the ongoing need for human oversight in content moderation. 👇

Figure: Number of automated content moderation decisions and human content moderation decisions in % declared by platforms in the first DSA transparency report.

Combining automation with human insight enhances user safety and upholds community standards. Automated tools provide the speed and efficiency needed to manage large volumes of content, while human moderators offer the nuanced understanding necessary for complex decisions. This balanced approach ensures a safer and more inclusive online environment.

Unlike most content moderation jobs, elv.ai offers its moderators in-app gamification. Meet Focus mode: a way to get through your shift one comment at a time with just ⬅️ arrow clicks ➡️, like in a simple video game. This way, all the manual work is done as efficiently as humanly possible!

At elv.ai, no manpower is wasted fighting the software. We believe moderation works best when the tools are as simple as possible, so our content moderators can put all their energy into critical thinking and decision-making. In other words: worry less, achieve more! 🫶

